Since the corruption challenge completed yesterday, I have had several request asking how I created the corrupt database. So here is the script that I used to create the Database Corruption Challenge 1.

First the initial setup. Most of this I stole from a query training session that I did several weeks ago. All I really needed was a table with some data in it.

CREATE DATABASE [CorruptionChallenge1];

GO

USE [CorruptionChallenge1];

CREATE TABLE Revenue

(

[id] INTEGER IDENTITY,

[DepartmentID] INTEGER,

[Revenue] INTEGER,

[Year] INTEGER,

[Notes] VARCHAR(300)

);

INSERT INTO Revenue ([DepartmentID], [Revenue], [Year])

VALUES (1,10030,1998),(2,20000,1998),(3,40000,1998),

(1,20000,1999),(2,600400,1999),(3,500400,1999),

(1,40050,2000),(2,400300,2000),(3,604000,2000),

(1,30000,2001),(2,30000,2001),(3,703000,2001),

(1,90000,2002),(2,200200,2002),(3,80000,2002),

(1,10300,2003),(2,1000,2003), (3,900300,2003),

(1,10000,2004),(2,10000,2004),(3,100300,2004),

(1,208000,2005),(2,200200,2005),(3,203000,2005),

(1,40000,2006),(2,30000,2006),(3,300300,2006),

(1,709000,2007),(2,40000,2007),(3,400300,2007),

(1,50000,2008),(2,50000,2008),(3,500300,2008),

(1,20000,2009),(2,600030,2009),(3,600300,2009),

(1,300700,2010),(2,70000,2010),(3,700300,2010),

(1,80000,2011),(2,80000,2011),(3,800200,2011),

(1,100030,2012),(2,90000,2012),(3,900300,2012),

(1,10000,2013),(2,90000,2013),(3,900100,2013),

(1,100400,2014),(2,900300,2014),(3,903000,2014),

(1,102000,2015),(2,902000,2015),(3,902000,2015);

UPDATE Revenue SET [Notes] = CAST(NEWID() as VARCHAR(300)) + 'This is some varchar data just to fil out some pages... data pages are only 8k, therefore the more we fill up in each page, the more pages this table will flow into, thus simulating a larger table for the corruption example';

CREATE CLUSTERED INDEX [clustId] ON [dbo].[Revenue]

(

[id] ASC

);

CREATE NONCLUSTERED INDEX [ncDeptIdYear] ON [dbo].[Revenue]

(

[DepartmentID] ASC,

[Revenue] ASC

);

CREATE NONCLUSTERED INDEX [ncBadNameForAnIndex] ON [dbo].[Revenue]

(

[Year] ASC

)

INCLUDE ( [Notes]) ;

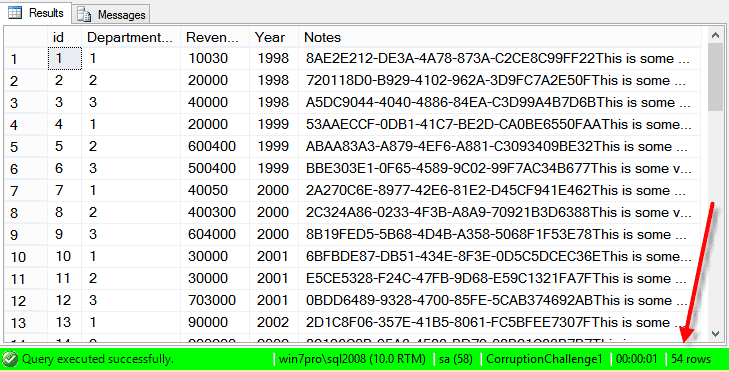

-- first lets look at the REVENUE table

SELECT *

FROM Revenue;

Read More »Corruption Challenge 1 – how I corrupted the database