Building a SQL Restore Script When Your Backup Runs

It is handy to have a SQL Restore script ready to go for every backup that you run. Here is a script that will help… Read More »Building a SQL Restore Script When Your Backup Runs


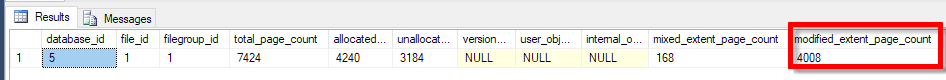

SQL Server 2017 introduces a new column in the sys.dm_db_file_space_usage dynamic management view. The column, modified_extent_page_count, tells you how many pages have been modified in your data file since the last full backup.

SELECT * FROM sys.dm_db_file_space_usage;

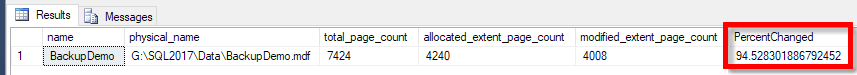

What is really interesting about this is that with a little math you can calculate the percentage of your data file that has been modified. This is useful when you run differential backups, because it helps you decide whether the next backup should be a differential or a full. A differential backup contains everything changed since the last full backup, so it grows as modified_extent_page_count grows. Once it gets large enough, it may make sense to take a full backup, which resets the counter and gets you back to smaller differential backups.

Here is an example:

    SELECT df.name,
           df.physical_name,
           total_page_count,
           allocated_extent_page_count,
           modified_extent_page_count,
           100.0 * modified_extent_page_count / allocated_extent_page_count AS PercentChanged
    FROM sys.dm_db_file_space_usage fsu
    INNER JOIN sys.database_files df ON df.file_id = fsu.file_id;

Now, based on the PercentChanged column, we can estimate the size of the differential backup and decide whether to run a differential backup or a full backup.
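As a rough sketch of how that decision could be automated: the script below sums the counters across all data files of the current database and picks a backup type. The 30 percent threshold, the `C:\SQL_BACKUP\` folder and the file names are hypothetical placeholders, not values from the original post.

```sql
-- Sketch only: run in the context of the database you want to back up.
-- The threshold and the backup paths below are illustrative assumptions.
DECLARE @ThresholdPercent decimal(9, 2) = 30.0;
DECLARE @PercentChanged   decimal(9, 2);
DECLARE @DbName           sysname        = DB_NAME();
DECLARE @FullPath         nvarchar(260)  = N'C:\SQL_BACKUP\' + DB_NAME() + N'_full.bak';
DECLARE @DiffPath         nvarchar(260)  = N'C:\SQL_BACKUP\' + DB_NAME() + N'_diff.bak';

-- Percentage of allocated pages modified since the last full backup,
-- across all data files. NULLIF guards against division by zero.
SELECT @PercentChanged =
       100.0 * SUM(modified_extent_page_count)
             / NULLIF(SUM(allocated_extent_page_count), 0)
FROM sys.dm_db_file_space_usage;

IF @PercentChanged >= @ThresholdPercent
    -- Enough has changed that a full backup resets the counter.
    BACKUP DATABASE @DbName TO DISK = @FullPath;
ELSE
    -- A differential requires that a full backup already exists.
    BACKUP DATABASE @DbName TO DISK = @DiffPath WITH DIFFERENTIAL;
```

The right threshold depends on your backup window and restore time targets, so treat the number as something to tune rather than a recommendation.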

Read More »Determining how much of your data file has been modified

From time to time I get asked about DBCC CHECKDB. There are many solutions out there, but I have one that I generally use that is very simple and does the job.

The script below creates a stored procedure in the DBHealthHistory database that can be used to check as many databases as you can get through in a specific time interval. Here it is set to 5 minutes, but that usually gets extended for large databases. If you schedule the job daily and it doesn’t get through checking all the databases today, it will pick up where it left off and check the rest tomorrow.

Depending on the number and size of your databases you may want to run this more than once a day, or for a longer period than the 5 minutes.

Note: the 5-minute limit is only checked before starting the next check. If you have a database that takes hours to check, and that check starts within the 5-minute interval, it will run until completion.
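The general idea can be sketched as follows. This is not the author's procedure: the table name `dbo.CheckDBHistory`, its columns and the loop structure are assumptions made for illustration. It is meant to be run in the DBHealthHistory database.

```sql
-- Hypothetical history table; the name and columns are illustrative.
IF OBJECT_ID(N'dbo.CheckDBHistory', N'U') IS NULL
    CREATE TABLE dbo.CheckDBHistory
    (
        DatabaseName sysname   NOT NULL,
        CheckedAt    datetime2 NOT NULL
    );
GO

DECLARE @RunStart datetime2 = SYSDATETIME();
DECLARE @StopAt   datetime2 = DATEADD(MINUTE, 5, @RunStart);
DECLARE @DbName   sysname;
DECLARE @Sql      nvarchar(max);

-- The time limit is tested only before each check starts,
-- so a long-running check is never interrupted.
WHILE SYSDATETIME() < @StopAt
BEGIN
    SET @DbName = NULL;

    -- Pick the online database checked longest ago (never-checked first),
    -- skipping anything already checked during this run.
    SELECT TOP (1) @DbName = d.name
    FROM sys.databases AS d
    LEFT JOIN (SELECT DatabaseName, MAX(CheckedAt) AS LastChecked
               FROM dbo.CheckDBHistory
               GROUP BY DatabaseName) AS h
           ON h.DatabaseName = d.name COLLATE DATABASE_DEFAULT
    WHERE d.state_desc = N'ONLINE'
      AND d.name <> N'tempdb'          -- excluded here for simplicity
      AND (h.LastChecked IS NULL OR h.LastChecked < @RunStart)
    ORDER BY h.LastChecked, d.name;    -- NULLs sort first in ascending order

    IF @DbName IS NULL BREAK;          -- every database was checked this run

    SET @Sql = N'DBCC CHECKDB (' + QUOTENAME(@DbName) + N') WITH NO_INFOMSGS;';
    EXEC sys.sp_executesql @Sql;

    -- A real job would also record whether the check reported errors.
    INSERT INTO dbo.CheckDBHistory (DatabaseName, CheckedAt)
    VALUES (@DbName, SYSDATETIME());
END;
```

Because the next database is always the one checked longest ago, a run that stops early naturally resumes with the unchecked databases the next time it is scheduled.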

One way to improve performance on SQL Server is with IFI or Instant File Initialization.

Normally data files are zeroed out whenever they are created or grown, which happens during an AUTOGROW, RESTORE, CREATE DATABASE or ALTER DATABASE. When the file grows, SQL Server runs through the new allocation and writes zeroes to all of it. This zeroing can take a great deal of time. Instant File Initialization skips the zeroing and just allocates the space. This works because SQL Server writes each 8 KB page to disk as it is used, overwriting whatever was in the uninitialized part of the file. Note that IFI applies to data files only; transaction log files are always zeroed.

Running some tests on a local virtual machine running SQL Server 2012 (similar results tested on SQL Server 2014 and SQL Server 2016), here is what I found.

For IFI to work, the account that the SQL Server service runs as needs to be granted the “Perform volume maintenance tasks” policy.

Read More »Instant File Initialization (IFI)
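A quick way to check whether IFI is in effect, on builds that expose the `instant_file_initialization_enabled` column in `sys.dm_server_services`, is sketched below. The column was added in later service packs and versions (SQL Server 2016 SP1 onward, as far as I know), so on older builds, such as an early SQL Server 2012 instance, this query will fail with an invalid column error.

```sql
-- Returns 'Y' or 'N' for the database engine service
-- on builds where the column exists.
SELECT servicename,
       service_account,
       instant_file_initialization_enabled
FROM sys.dm_server_services;
```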

Updating SQL Server statistics may not be as obvious as it may sound.

Imagine this scenario. I invite you over for dinner and a game of Scrabble. After dinner, I start to do the dishes while you set up the game. Rather than just doing the dishes that are dirty from our dinner, I decide to take all the dishes from the shelves and drawers in my kitchen. I create a gigantic pile of all of the dishes, both clean and dirty, on the kitchen counter. You get the game set up, and we start playing. Between turns, I jump back into the kitchen and wash a few dishes, then on my turn I jump back into the game and play, then back to the kitchen again. During the game you are constantly waiting for me to come back and make my move. You can see how long this would draw out the game, with me washing all the dishes, even the ones that were already clean. Four hours later, I finally finish the dishes, and shortly thereafter we finish the game. It is unlikely, even if you love to play Scrabble, that you would ever return to my house for dinner and a game again. Tomorrow night, when I have dinner with my family, I do the same thing, and every night after dinner I wash all the dishes in the kitchen, even the clean ones. You can see how wasteful this sounds, and perhaps some people would even label me as OCD or something worse in this scenario.


Although this is obviously the wrong way to do things, we often end up doing the equivalent in our SQL Server maintenance tasks.
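One reading of the analogy is that statistics which have not changed are the clean dishes. As a sketch under that assumption, the query below lists only the statistics on user tables in the current database that have seen modifications since they were last updated, using `sys.dm_db_stats_properties`. What counts as "dirty enough" to update is left to you.

```sql
-- Statistics with at least one modification since their last update.
SELECT OBJECT_SCHEMA_NAME(s.object_id) AS SchemaName,
       OBJECT_NAME(s.object_id)        AS TableName,
       s.name                          AS StatsName,
       sp.last_updated,
       sp.rows,
       sp.modification_counter
FROM sys.stats AS s
CROSS APPLY sys.dm_db_stats_properties(s.object_id, s.stats_id) AS sp
WHERE OBJECTPROPERTY(s.object_id, 'IsUserTable') = 1
  AND sp.modification_counter > 0
ORDER BY sp.modification_counter DESC;
```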

The following are the steps that I went through to create a more difficult Database Corruption Challenge for Week 4.

Here is how I built the database. Nothing fancy, although I did add an extra FILEGROUP called [UserObjects].

    CREATE DATABASE [CorruptionChallenge4]
    ON PRIMARY
    ( NAME = N'CorruptionChallenge4',
      FILENAME = N'C:\SQL_DATA\CorruptionChallenge4.mdf',
      SIZE = 4288KB, MAXSIZE = UNLIMITED, FILEGROWTH = 1024KB ),
    FILEGROUP [UserObjects] DEFAULT
    ( NAME = N'UserObjects',
      FILENAME = N'C:\SQL_DATA\CorruptionChallenge4_UserObjects.ndf',
      SIZE = 4096KB, MAXSIZE = UNLIMITED, FILEGROWTH = 1024KB )
    LOG ON
    ( NAME = N'CorruptionChallenge4_log',
      FILENAME = N'C:\SQL_DATA\CorruptionChallenge4_log.ldf',
      SIZE = 1088KB, MAXSIZE = 2048GB, FILEGROWTH = 10% )
    COLLATE SQL_Latin1_General_CP1_CS_AS;
    GO

Notice that, to make things slightly more challenging, the database uses a case-sensitive, accent-sensitive collation. I hope this didn’t cause too much trouble.
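To see what the case-sensitive collation changes in practice, here is a small illustration that compares the same two strings under the database's collation and under its case-insensitive counterpart. The sample strings are made up for the demo.

```sql
SELECT CASE WHEN N'Customer' = N'CUSTOMER' COLLATE SQL_Latin1_General_CP1_CS_AS
            THEN 'equal' ELSE 'different' END AS CaseSensitive,
       CASE WHEN N'Customer' = N'CUSTOMER' COLLATE SQL_Latin1_General_CP1_CI_AS
            THEN 'equal' ELSE 'different' END AS CaseInsensitive;
-- CaseSensitive = different, CaseInsensitive = equal
```

In a database with a case-sensitive default collation, object and column names are matched the same way, so a table name typed with the wrong capitalization is not found.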

Next I created a couple of tables.

Here is a quick video training on how to use the T-SQL EOMONTH function on SQL Server. This was originally part of my free SQL query training… Read More »Using The TSQL EOMONTH Function
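For a quick taste before watching, here is a minimal example of EOMONTH. The date is an arbitrary sample value.

```sql
DECLARE @d date = '20160210';

SELECT EOMONTH(@d)    AS EndOfMonth,      -- 2016-02-29
       EOMONTH(@d, 1) AS EndOfNextMonth;  -- 2016-03-31
```

The optional second argument adds that many months before the end of month is calculated.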

Here is a short video tutorial that shows how to use the CHOOSE function in T-SQL on SQL Server 2012, SQL Server 2014 or Newer. This… Read More »Using the TSQL CHOOSE Function
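As a minimal illustration of CHOOSE, with made-up values: the function returns the item at the given 1-based position, or NULL when the index is out of range.

```sql
SELECT CHOOSE(2, 'Red', 'Green', 'Blue') AS SecondItem,  -- Green
       CHOOSE(5, 'Red', 'Green', 'Blue') AS OutOfRange;  -- NULL
```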

Using the GO command in T-SQL to run a batch of code multiple times is something that I commonly do in demos and trainings. What amazes… Read More »Executing a TSQL batch multiple times using GO
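A minimal sketch of the technique, using a hypothetical temp table named `#GoDemo`: GO followed by a number tells the client tool (SSMS or sqlcmd) to send the preceding batch that many times. GO is a client-side batch separator rather than a T-SQL statement, so it has to sit on a line of its own.

```sql
CREATE TABLE #GoDemo
(
    Id        int IDENTITY(1, 1),
    CreatedAt datetime2 DEFAULT SYSDATETIME()
);
GO

-- This batch is executed five times by the client.
INSERT INTO #GoDemo DEFAULT VALUES;
GO 5

SELECT COUNT(*) AS RowsInserted FROM #GoDemo;  -- 5
GO
```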

In my Common Table Expressions presentation the topic of recursion often comes up, but for scalar functions in T-SQL, it might not be as common.… Read More »Recursive Scalar Function in T-SQL
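As a small sketch of what a recursive scalar function can look like, here is a factorial. The function name `dbo.Factorial` is an illustrative choice, not taken from the post.

```sql
CREATE FUNCTION dbo.Factorial (@n int)
RETURNS bigint
AS
BEGIN
    -- Base case stops the recursion.
    IF @n <= 1
        RETURN 1;

    -- Recursive case: the function calls itself with a smaller argument.
    RETURN @n * dbo.Factorial(@n - 1);
END;
GO

SELECT dbo.Factorial(5) AS Result;  -- 120
```

Two limits apply to this sketch: SQL Server allows at most 32 nesting levels for functions, and the result overflows bigint above 20.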