Building a SQL Restore Script When Your Backup Runs

It is handy to have a SQL Restore script ready to go for every backup that you run. Here is a script that will help… Read More »Building a SQL Restore Script When Your Backup Runs


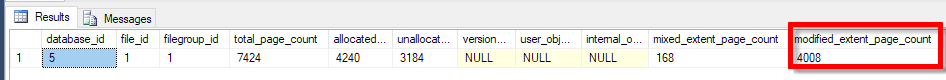

SQL Server 2017 introduces a new column in the sys.dm_db_file_space_usage dynamic management view. The column is modified_extent_page_count, which reports how many pages in each data file have been modified since the last full backup.

SELECT * FROM sys.dm_db_file_space_usage;

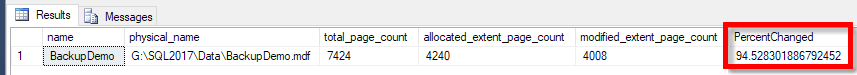

What is really interesting about this is that with a little math you can calculate the percentage of your data file that has been modified. This is useful when running differential backups, because it lets you determine whether it makes more sense to run a full backup or a differential backup. A differential backup contains everything changed since the last full backup, so it grows as modified_extent_page_count grows. Once it gets large enough, it may make sense to do a full backup, which resets this counter and gets you back to smaller differential backups.

Here is an example:

SELECT df.name, df.physical_name, total_page_count, allocated_extent_page_count, modified_extent_page_count, 100.0 * modified_extent_page_count / allocated_extent_page_count as PercentChanged FROM sys.dm_db_file_space_usage fsu INNER JOIN sys.database_files df on df.file_id = fsu.file_id;

Now, based on the PercentChanged column, we can estimate the size of the differential backup and decide whether to do a differential backup or a full backup.
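As a rough sketch of that decision, the percentage can be aggregated across all data files in the current database and compared against a cutoff. The 50 percent threshold and the names @ThresholdPercent and SuggestedBackupType are assumptions made for illustration; the right cutoff depends on your backup windows and restore requirements.

```sql
-- Sketch: suggest a backup type from the percentage of modified pages.
-- @ThresholdPercent is an assumed cutoff, not a recommendation.
DECLARE @ThresholdPercent decimal(5, 2) = 50.0;
DECLARE @PercentChanged   decimal(5, 2);

-- Aggregate across every data file in the current database.
-- NULLIF avoids a divide-by-zero error if nothing is allocated.
SELECT @PercentChanged =
       100.0 * SUM(modified_extent_page_count)
             / NULLIF(SUM(allocated_extent_page_count), 0)
FROM sys.dm_db_file_space_usage;

-- A NULL percentage falls through to the ELSE branch.
SELECT @PercentChanged AS PercentChanged,
       CASE WHEN @PercentChanged >= @ThresholdPercent
            THEN 'FULL'
            ELSE 'DIFFERENTIAL'
       END AS SuggestedBackupType;
```

The query only suggests a backup type; wiring the result into an actual BACKUP DATABASE statement is left to your own job logic.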

Read More »Determining how much of your data file has been modified

From time to time I get asked about DBCC CHECKDB, and there are many solutions out there, but I have one that I generally use that is very simple and does the job.

The script below creates a stored procedure in the DBHealthHistory database that can be used to check as many databases as you can get through in a specific time interval. Here it is set to 5 minutes, but that usually gets extended for large databases. If you schedule the job daily and it doesn’t get through checking all the databases today, it will pick up where it left off and check the rest tomorrow.

Depending on the number and size of your databases you may want to run this more than once a day, or for a longer period than the 5 minutes.

Note: the 5-minute limit is only checked before starting the next check, so if you have a database that takes hours to check, that check will kick off within the 5-minute interval and run until completion.
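The time-boxed, resumable behavior described above could be sketched roughly as follows. This is not the stored procedure from the post: the dbo.CheckDbHistory table, its columns, and the variable names are all hypothetical, and the sketch records only when a check ran, not what it found.

```sql
-- Hypothetical sketch of a time-boxed CHECKDB loop (not the author's procedure).
-- dbo.CheckDbHistory and its columns are assumed names.
IF OBJECT_ID(N'dbo.CheckDbHistory', N'U') IS NULL
    CREATE TABLE dbo.CheckDbHistory
    (
        DatabaseName sysname   NOT NULL PRIMARY KEY,
        LastChecked  datetime2 NOT NULL
    );
GO

DECLARE @RunStart datetime2 = SYSDATETIME();
DECLARE @StopAt   datetime2 = DATEADD(MINUTE, 5, @RunStart);  -- time budget
DECLARE @DatabaseName sysname;
DECLARE @Sql nvarchar(max);

-- The clock is only consulted before each check starts,
-- so a check that is already running always finishes.
WHILE SYSDATETIME() < @StopAt
BEGIN
    SET @DatabaseName = NULL;

    -- Pick the database checked longest ago (never-checked ones first),
    -- skipping anything already checked during this run.
    SELECT TOP (1) @DatabaseName = d.name
    FROM sys.databases AS d
    LEFT JOIN dbo.CheckDbHistory AS h
           ON h.DatabaseName = d.name COLLATE DATABASE_DEFAULT
    WHERE d.state_desc = N'ONLINE'
      AND d.name <> N'tempdb'
      AND (h.LastChecked IS NULL OR h.LastChecked < @RunStart)
    ORDER BY ISNULL(h.LastChecked, '19000101'), d.name;

    IF @DatabaseName IS NULL
        BREAK;  -- every database has been checked in this run

    SET @Sql = N'DBCC CHECKDB (' + QUOTENAME(@DatabaseName) + N') WITH NO_INFOMSGS;';
    EXEC sys.sp_executesql @Sql;

    -- Record completion so the next run resumes with the oldest entries.
    UPDATE dbo.CheckDbHistory
    SET LastChecked = SYSDATETIME()
    WHERE DatabaseName = @DatabaseName;

    IF @@ROWCOUNT = 0
        INSERT INTO dbo.CheckDbHistory (DatabaseName, LastChecked)
        VALUES (@DatabaseName, SYSDATETIME());
END;
```

Ordering by the oldest LastChecked value is what makes the job resume where it left off: databases that were skipped today sort to the front tomorrow.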

CREATE PROCEDURE #MyProcedureName

Yes, you can create temporary stored procedures by prefixing the name of the sproc with a #. The temporary stored procedure is sort of a “leave no trace” stored procedure that is only good for your session. If you use double ## you will get a global temporary stored procedure.

For instance:
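A minimal sketch of a session-scoped temporary procedure might look like this. The name #SayHello, its parameter, and its body are made up for illustration.

```sql
-- Hypothetical example; the procedure name and body are illustrative only.
CREATE PROCEDURE #SayHello
    @Name sysname
AS
BEGIN
    SET NOCOUNT ON;
    SELECT N'Hello, ' + @Name AS Greeting;
END;
GO

-- Callable only from the session that created it.
EXEC #SayHello @Name = N'world';
GO

-- Optional cleanup; the procedure is also removed when the session ends.
DROP PROCEDURE #SayHello;
GO
```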

The question of how to delete a trigger after a specific date came up. Here is what could be done. If you want to… Read More »Drop a trigger after a specific date

Updating SQL Server statistics may not be as obvious as it may sound.

Imagine this scenario. I invite you over for dinner and a game of Scrabble. After dinner, I start to do the dishes while you set up the game. Rather than just doing the dishes that are dirty from our dinner, I decide to take all the dishes from the shelves and drawers in my kitchen. I create a gigantic pile of all of the dishes, both clean and dirty, on the kitchen counter. You get the game set up, and we start playing. Between turns, I jump back into the kitchen and wash a few dishes, then on my turn I jump back into the game and play, then it is back to the kitchen again. During the game you are constantly waiting for me to jump back in and make my move. You can see how long this would draw out the game, with me washing all the dishes, even the ones that were already clean. Four hours later, I finally finish the dishes, and shortly thereafter we finish the game. It is unlikely, even if you love to play Scrabble, that you would ever return to my house for dinner and a game again. Tomorrow night, when I have dinner with my family, I do the same thing, and every night after dinner I wash all the dishes in the kitchen, even the clean ones. You can see how wasteful this sounds, and perhaps some people would even label me as OCD or something worse in this scenario.


Although this is obviously the wrong way to do things, we often end up doing the equivalent of this in our SQL Server maintenance tasks.

The Optimize for Ad Hoc Workloads server configuration can improve performance, and is extremely unlikely to negatively impact performance.

This feature was introduced in SQL Server 2008, and as with many new features in SQL Server, it is not turned on by default.

Without the Optimize for Ad Hoc Workloads feature enabled, when a plan is compiled, it is kept around in memory (cached) until something else pushes it out of the cache.

With the Optimize for Ad Hoc Workloads feature enabled, the first time a plan is compiled it is not kept in cache; instead, a small stub is kept to indicate that the query has previously been compiled. The second time the plan is compiled, it is kept in memory. Over time you end up with fewer single-use queries in memory, reducing the memory footprint of the plan cache. The trade-off is that multi-use ad hoc plans need to be compiled twice, once on first use and again on second use.

The Optimize for Ad Hoc Workloads server configuration doesn’t change the size of the plan cache in SQL Server. It does, however, prevent single-use ad hoc plans from using up plan cache space, leaving more space for other cached plans.
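For reference, the setting can be turned on with sp_configure. The sketch below enables it, confirms the running value, and then summarizes how much of the ad hoc plan cache is made up of stubs versus full plans. The column aliases PlanCount and TotalBytes are just illustrative names.

```sql
-- 'optimize for ad hoc workloads' is an advanced option, so expose those first.
EXEC sys.sp_configure N'show advanced options', 1;
RECONFIGURE;

EXEC sys.sp_configure N'optimize for ad hoc workloads', 1;
RECONFIGURE;

-- Confirm the running value (1 = enabled).
SELECT name, value_in_use
FROM sys.configurations
WHERE name = N'optimize for ad hoc workloads';

-- Compare stubs ('Compiled Plan Stub') with fully cached ad hoc plans.
SELECT cacheobjtype,
       objtype,
       COUNT(*) AS PlanCount,
       SUM(CAST(size_in_bytes AS bigint)) AS TotalBytes
FROM sys.dm_exec_cached_plans
WHERE objtype = N'Adhoc'
GROUP BY cacheobjtype, objtype;
```

The change applies to plans compiled after it is enabled; plans already in cache stay there until they are evicted.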

The alternate solution to the Database Corruption Challenge this week was created by Patrick Flynn. This solution is the only solution to successfully recover all the data without using any of the backups. If the challenge had been structured differently and all you had was just the database files and no backups, this solution would work just fine.

As you read through this solution, you will notice the use of Common Table Expressions throughout. Patrick is the first person that I have seen write TSQL code that uses Common Table Expressions more than I do. (I mean that as a compliment, Patrick.)

To start with, let’s add the UDF_Convert_Hex_to_Binary function into the master database to match what Patrick had in his system.

All code provided by Patrick, comments and screenshots by me.

-- Step 0 - Create Supporting function

USE [master]

GO

CREATE FUNCTION [dbo].[UDF_Convert_Hex_to_Binary]

(

@HEX VARBINARY(MAX)

)

RETURNS VARCHAR(MAX)

BEGIN

DECLARE @BINARY VARCHAR(MAX)

;WITH N1 (n) AS (SELECT 1 UNION ALL SELECT 1),

N2 (n) AS (SELECT 1 FROM N1 AS X, N1 AS Y),

N3 (n) AS (SELECT 1 FROM N2 AS X, N2 AS Y),

N4 (n) AS (SELECT ROW_NUMBER() OVER(ORDER BY X.n)

FROM N3 AS X, N3 AS Y)

SELECT @BINARY=ISNULL(@BINARY,'')

+ CONVERT(NVARCHAR(1), (SUBSTRING(@HEX, Nums.n, 1) / 128) % 2)

+ CONVERT(NVARCHAR(1), (SUBSTRING(@HEX, Nums.n, 1) / 64) % 2)

+ CONVERT(NVARCHAR(1), (SUBSTRING(@HEX, Nums.n, 1) / 32) % 2)

+ CONVERT(NVARCHAR(1), (SUBSTRING(@HEX, Nums.n, 1) / 16) % 2)

+ CONVERT(NVARCHAR(1), (SUBSTRING(@HEX, Nums.n, 1) / 8) % 2)

+ CONVERT(NVARCHAR(1), (SUBSTRING(@HEX, Nums.n, 1) / 4) % 2)

+ CONVERT(NVARCHAR(1), (SUBSTRING(@HEX, Nums.n, 1) / 2) % 2)

+ CONVERT(NVARCHAR(1), SUBSTRING(@HEX, Nums.n, 1) % 2)

FROM N4 Nums

WHERE Nums.n<=LEN(@HEX)

RETURN @BINARY

END

GO
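As a quick sanity check of the function, it can be called with a single byte. The value 0xA5 is an arbitrary sample chosen for illustration. Note that the N4 tally expression in the function produces 256 rows (2, then 4, then 16, then 256), so as written it converts at most the first 256 bytes of its input.

```sql
-- 0xA5 is an arbitrary sample byte: 1010 0101 in binary.
SELECT master.dbo.UDF_Convert_Hex_to_Binary(0xA5) AS BitString;
-- returns '10100101'
```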

Once that function is created, let’s drop the previous CorruptionChallenge7 database, because this is probably not our first time running through the solution. If you have a different way of checking for a database and dropping it, please see my blog post related to this topic earlier today.
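One common pattern for that check-and-drop step is sketched here. It is an assumption about a reasonable approach rather than the script from the post. Forcing SINGLE_USER rolls back any other open sessions, so only use it against a throwaway test database like this one.

```sql
USE [master];
GO

-- Drop the database only if it already exists.
IF DB_ID(N'CorruptionChallenge7') IS NOT NULL
BEGIN
    -- Disconnect other sessions so the drop is not blocked.
    ALTER DATABASE [CorruptionChallenge7] SET SINGLE_USER WITH ROLLBACK IMMEDIATE;
    DROP DATABASE [CorruptionChallenge7];
END;
GO
```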

Read More »Database Corruption Challenge Week 7 – Alternate Solution

How do you check if a database exists, drop it and recreate it for testing purposes? After running the Database Corruption Challenge for several weeks… Read More »How Do You Check if a Database Exists…

It is my pleasure to announce the winners in the Week 4 Database Corruption Challenge. But first, how about a rundown of the challenge.

In order to make up for the difficult time at which Challenge #3 was issued, Challenge #4 was issued at 2:00pm Pacific time on a Friday afternoon. Those who had signed up for my Newsletter received early notification of when the corruption challenge would occur, but not details on what the challenge would be. When I launched it, I ran into a couple of technical issues: it appears that my WordPress site that hosts this blog was having difficulty with attachments larger than 8 MB. So I ended up having to upload the file to another site, where Database Health Monitor is hosted, which accepted the larger file. This delayed the start of the challenge by about 20 minutes.

After the challenge was released, it was quiet, almost too quiet. Then about 40 minutes into it, Randolph West messaged me on Twitter to say that he was on it, and that he had discovered the CDC (Change Data Capture) tables. He also mentioned that he had dinner plans. He kept working on it, and at 4:55pm (Pacific time) he provided me with proof that he had completed the challenge; however, it wasn’t until 5:06pm (Pacific time) that he provided me the full script showing his solution. At that point Randolph was the winner of the Week 4 Database Corruption Challenge. Two hours and 46 minutes after the challenge became available for download, Randolph had won the competition.