Building a SQL Restore Script When Your Backup Runs

It is handy to have a SQL Restore script ready to go for every backup that you run. Here is a script that will help…

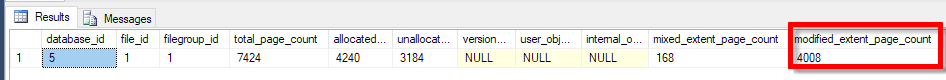

SQL Server 2017 introduces a new column in the sys.dm_db_file_space_usage system table. The column is modified_extent_page_count, which tells how many pages have been modified in your data file since the last full backup.

SELECT * FROM sys.dm_db_file_space_usage;

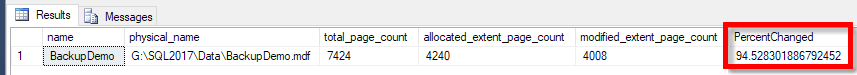

What is really interesting about this is that with a little math you can calculate the percentage of your data file that has been modified. This would be useful when running differential backups to be able to determine if it would make more sense to run a full backup or a differential backup. Basically when your differential backup gets large enough, based on the number of modified_extent_page_count pages, then it may make sense to do a full backup and reset this counter, and get back to smaller differential backups.

Here is an example

SELECT df.name, df.physical_name, total_page_count, allocated_extent_page_count, modified_extent_page_count, 100.0 * modified_extent_page_count / allocated_extent_page_count as PercentChanged FROM sys.dm_db_file_space_usage fsu INNER JOIN sys.database_files df on df.file_id = fsu.file_id;

Now based on the Percent Changed column we can make some assumptions on the size of the differential backup, and decide if we want to do a differential backup or a full backup.

Read More »Determining how much of your data file has been modified
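As a sketch of the decision step, assuming you feed it the page counts returned by the query above, the Python below applies the same PercentChanged math and compares the result against a threshold. The 50 percent cutoff, the function names, and the choice to sum page counts across all data files are illustrative assumptions, not a recommendation from this post; pick a threshold that fits your own storage and restore-time needs.

```python
from typing import Iterable, Optional, Tuple

# Hypothetical threshold: at or above this percentage, prefer a full backup.
DEFAULT_THRESHOLD_PERCENT = 50.0


def percent_changed(modified_pages: int, allocated_pages: int) -> Optional[float]:
    """Same math as the PercentChanged column: 100 * modified / allocated.

    Returns None when nothing is allocated, mirroring NULLIF(..., 0) in T-SQL.
    """
    if allocated_pages == 0:
        return None
    return 100.0 * modified_pages / allocated_pages


def choose_backup_type(
    files: Iterable[Tuple[int, int]],
    threshold_percent: float = DEFAULT_THRESHOLD_PERCENT,
) -> str:
    """files: (modified_extent_page_count, allocated_extent_page_count) per data file.

    Totals are summed across files so large files weigh more than small ones.
    """
    modified_total = 0
    allocated_total = 0
    for modified, allocated in files:
        modified_total += modified
        allocated_total += allocated
    pct = percent_changed(modified_total, allocated_total)
    if pct is None:
        return "FULL"  # assumption: with nothing to compare, default to a full backup
    return "FULL" if pct >= threshold_percent else "DIFFERENTIAL"


if __name__ == "__main__":
    # Made-up page counts for two data files, purely for illustration.
    sample = [(1200, 4000), (300, 1000)]
    print(percent_changed(1200, 4000))  # 30.0
    print(choose_backup_type(sample))   # DIFFERENTIAL (1500 of 5000 pages = 30%)
```

Summing across files is a design choice: checking each file separately against the threshold would instead trigger a full backup as soon as any single file crosses it.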

From time to time I get asked about DBCC CHECKDB. There are many solutions out there, but the one I generally use is very simple and does the job.

The script creates a stored procedure in the DBHealthHistory database that checks as many databases as it can get through in a specific time interval. Here the interval is set to 5 minutes, but it is usually extended for large databases. If you schedule the job to run daily and it doesn’t get through all the databases today, it will pick up where it left off and check the rest tomorrow.

Depending on the number and size of your databases, you may want to run this more than once a day, or for longer than 5 minutes.

Note: the 5-minute limit is checked before each database check starts, so a database that takes hours to check will still be started if the window has not yet expired, and that check will run until completion.
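To make the time-boxing rule concrete, here is a minimal Python model of the scheduling logic only. It is not the stored procedure from this post, which is T-SQL and is not reproduced in this excerpt. The function name `run_checks_in_window`, the `last_checked` dictionary, the `run_check` callback, and the database names in the demo are all hypothetical: in practice `run_check` would issue DBCC CHECKDB, and the history would have to be persisted somewhere between runs (for example a table, which is an assumption here) so the next run can resume.

```python
import time
from typing import Callable, Dict, List


def run_checks_in_window(
    databases: List[str],
    last_checked: Dict[str, float],
    run_check: Callable[[str], None],
    window_seconds: float = 5 * 60,
    clock: Callable[[], float] = time.time,
) -> List[str]:
    """Check databases, least recently checked first, until the window closes.

    The deadline is tested only *before* a check starts, so a check that
    starts inside the window runs to completion even if it takes hours.
    last_checked is updated in place; persisting it between runs is what
    lets the next run pick up where this one stopped.
    """
    start = clock()
    # Databases that were never checked (missing key) sort first.
    queue = sorted(databases, key=lambda name: last_checked.get(name, 0.0))
    checked: List[str] = []
    for name in queue:
        if clock() - start >= window_seconds:
            break  # out of time: leave the rest for the next scheduled run
        run_check(name)  # hypothetical hook, e.g. run DBCC CHECKDB here
        last_checked[name] = clock()
        checked.append(name)
    return checked


if __name__ == "__main__":
    # Fake clock so the example runs instantly: every check "takes" 200 s.
    fake_now = [0.0]

    def fake_clock() -> float:
        return fake_now[0]

    def fake_check(name: str) -> None:
        fake_now[0] += 200

    history: Dict[str, float] = {}
    dbs = ["Sales", "HR", "Inventory", "Archive"]
    print(run_checks_in_window(dbs, history, fake_check, clock=fake_clock))
    # ['Sales', 'HR']  (HR starts at 200 s and finishes past the 300 s window)
    print(run_checks_in_window(dbs, history, fake_check, clock=fake_clock))
    # ['Inventory', 'Archive']  (the next run resumes with the unchecked ones)
```

Ordering by the last-check time is one way to get the "pick up where it left off" behavior; once every database has been checked at least once, it also gives priority to the databases that have gone longest without a check.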

CREATE PROCEDURE #MyProcedureName

Yes, you can create temporary stored procedures by prefixing the stored procedure name with a #. A temporary stored procedure is a sort of “leave no trace” stored procedure that is available only for your session. If you use a double ## prefix, you get a global temporary stored procedure instead.

For instance:

Drop a trigger after a specific date

The question of how to delete a trigger after a specific date came up. Here is what could be done. If you want to…

How Do You Check if a Database Exists…

How do you check if a database exists, drop it, and recreate it for testing purposes? After running the Database Corruption Challenge for several weeks…

It is my pleasure to announce the winners of the Week 4 Database Corruption Challenge. But first, here is a rundown of the challenge.

To make up for the inconvenient time at which Challenge #3 was issued, Challenge #4 was issued at 2:00pm Pacific time on a Friday afternoon. Those who had signed up for my newsletter received early notification of when the corruption challenge would occur, but not details on what the challenge would be. When I launched it, I ran into a couple of technical problems: it appears that the WordPress site that hosts this blog had difficulty with attachments larger than 8 MB. So I ended up uploading the file to another site, the one where Database Health Monitor is hosted, which accepted the larger file. This delayed the start of the challenge by about 20 minutes.

After the challenge was released, it was quiet, almost too quiet. Then about 40 minutes into it, Randolph West messaged me on Twitter to say that he was on it, and that he had discovered the CDC (Change Data Capture) tables. He also mentioned that he had dinner plans. He kept working on it, and at 4:55pm (Pacific time) he provided me with proof that he had completed the challenge; however, it wasn’t until 5:06pm (Pacific time) that he provided me the full script showing his solution. At that point Randolph was the winner of the Week 4 Database Corruption Challenge, two hours and 46 minutes after the challenge became available for download.

The following are the steps that I went through to create a more difficult Database Corruption Challenge for Week 4.

Here is how I built the database. Nothing fancy, although I did add an extra FILEGROUP called [UserObjects] and made it the default filegroup.

CREATE DATABASE [CorruptionChallenge4] ON PRIMARY ( NAME = N'CorruptionChallenge4', FILENAME = N'C:\SQL_DATA\CorruptionChallenge4.mdf', SIZE = 4288KB, MAXSIZE = UNLIMITED, FILEGROWTH = 1024KB ), FILEGROUP [UserObjects] DEFAULT ( NAME = N'UserObjects', FILENAME = N'C:\SQL_DATA\CorruptionChallenge4_UserObjects.ndf', SIZE = 4096KB, MAXSIZE = UNLIMITED, FILEGROWTH = 1024KB ) LOG ON ( NAME = N'CorruptionChallenge4_log', FILENAME = N'C:\SQL_DATA\CorruptionChallenge4_log.ldf', SIZE = 1088KB, MAXSIZE = 2048GB, FILEGROWTH = 10% ) COLLATE SQL_Latin1_General_CP1_CS_AS;
GO

Notice that, to make things slightly more challenging, the database uses a case-sensitive, accent-sensitive collation. I hope this didn’t cause too much trouble.

Next I created a couple of tables.

Using the TSQL IIF Function

Here is a quick video tutorial on how to use the IIF function in SQL Server 2012, SQL Server 2014, or newer. This was originally part…

Simple Cursor Example : FORWARD_ONLY vs FAST FORWARD

Here is our simple cursor example showing both FAST_FORWARD and FORWARD_ONLY cursors. A FAST_FORWARD cursor in SQL Server is a type of forward-only, read-only cursor…