DBCC CleanTable

Being day eight of the DBCC Command month at SteveStedman.com, today’s featured DBCC Command is DBCC CLEANTABLE.

Many times I have worked on a database that has evolved over 10 or more years of changes from different developers and DBAs. One of the signs I see in databases like this is the waste associated with obsolete columns. For instance someone had an email address column as a varchar(1024) just to have plenty of space, then someone decided to do some normalization and they created a table just to hold email addresses, and the original table only had a id to represent the email address that was foreign keyed to the new table. In a good case the developer was wise enough to remove the original column, but not always. Assume the case where it wasn’t delete, so we do a check, determine that the old varchar(1024) column is not being used so we drop the column. After dropping the column we notice that the table didn’t get any smaller. Thats OK, maybe we will just use that space that we freed up from the column we dropped over time as the table grows, but NO. The space associated with that column is left around.

So to actually free up that space associated with the dropped columns so that it can be used there are 2 common options.

- DBCC CleanTable

- Rebuild the clustered index

The purpose of this post is to demonstrate how DBCC CleanTable can be used to regain wasted space.

Description:

DBCC CleanTable is used for clean up waste left around by deleting variable length columns.

DBCC CLEANTABLE Syntax:

dbcc cleantable

(

{ 'database_name' | database_id | 0 }

, { 'table_name' | table_id | 'view_name' | view_id }

[ , batch_size ]

)

[ WITH NO_INFOMSGS ]

The Third Argument is Batch size: This is the number of rows processed in one transaction. If not specified or if value is 0, it means all rows of table will be in 1 transaction. For larger tables it may make sense to process parts of the table in smaller batches.

Example:

First we will create a table to use for the following example

CREATE TABLE [LotsOfWaste] ( id int, --would normally be an INT IDENTITY firstName VARCHAR (8000), MiddleName VARCHAR (8000), lastName VARCHAR (8000), SocialSecurityNumber VARCHAR (8000), FavoriteFood VARCHAR (8000), FavoriteColor VARCHAR (8000), archived bit default 0 );

First we will fill up the table with 10, 000 rows using the GO statement to repeat our INSERT statement 10,000 times.

GO

set nocount on;

INSERT INTO [dbo].[LotsOfWaste]

([firstName]

,[MiddleName]

,[lastName]

,[SocialSecurityNumber]

,[FavoriteFood]

,[FavoriteColor]

,[archived])

VALUES (

REPLICATE('1234567890ABCDEFGHIJKLMNOPQRSTUVWXYZ', cast(rand() * 10 as int))

,REPLICATE('1234567890ABCDEFGHIJKLMNOPQRSTUVWXYZ', cast(rand() * 10 as int))

,REPLICATE('1234567890ABCDEFGHIJKLMNOPQRSTUVWXYZ', cast(rand() * 10 as int))

,REPLICATE('1234567890ABCDEFGHIJKLMNOPQRSTUVWXYZ', cast(rand() * 10 as int))

,REPLICATE('1234567890ABCDEFGHIJKLMNOPQRSTUVWXYZ', cast(rand() * 10 as int))

,REPLICATE('1234567890ABCDEFGHIJKLMNOPQRSTUVWXYZ', cast(rand() * 10 as int))

,1)

GO 10000

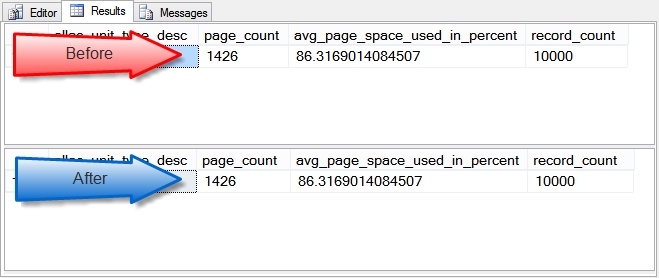

Now to check on the number of pages allocated for this table, and the percentage used. Below we see that there are 1426 page allocated, and of the memory associated with those 1426 pages, 86.3% of that memory is used to accomodate the 10,000 records.

SELECT alloc_unit_type_desc, page_count,

avg_page_space_used_in_percent, record_count

FROM sys.dm_db_index_physical_stats( DB_ID('dbcc_corruption'),

OBJECT_ID(N'LotsOfWaste'),

NULL,NULL,'Detailed')

Now lets drop 4 columns from the table, and take a look at the pages allocated and the space used.

SELECT alloc_unit_type_desc, page_count,

avg_page_space_used_in_percent, record_count

FROM sys.dm_db_index_physical_stats( DB_ID('dbcc_corruption'),

OBJECT_ID(N'LotsOfWaste'),

NULL,NULL,'Detailed')

ALTER TABLE [dbo].[LotsOfWaste] DROP COLUMN [FavoriteColor];

ALTER TABLE [dbo].[LotsOfWaste] DROP COLUMN [FavoriteFood];

ALTER TABLE [dbo].[LotsOfWaste] DROP COLUMN [SocialSecurityNumber];

ALTER TABLE [dbo].[LotsOfWaste] DROP COLUMN [MiddleName];

SELECT alloc_unit_type_desc, page_count,

avg_page_space_used_in_percent, record_count

FROM sys.dm_db_index_physical_stats( DB_ID('dbcc_corruption'),

OBJECT_ID(N'LotsOfWaste'),

NULL,NULL,'Detailed')

The number of pages, percent used, and record count are exactly the same before and after deleting the columns. This table is not using any less space now that those 4 large columns were deleted.

So maybe we can just insert more rows and that will take advantage of the space that was being used by the 4 deleted columns. Perhaps…?

SELECT alloc_unit_type_desc, page_count,

avg_page_space_used_in_percent, record_count

FROM sys.dm_db_index_physical_stats( DB_ID('dbcc_corruption'),

OBJECT_ID(N'LotsOfWaste'),

NULL,NULL,'Detailed')

GO

INSERT INTO [dbo].[LotsOfWaste]

([firstName]

,[lastName]

,[archived])

VALUES (

REPLICATE('1234567890ABCDEFGHIJKLMNOPQRSTUVWXYZ', cast(rand() * 10 as int))

,REPLICATE('1234567890ABCDEFGHIJKLMNOPQRSTUVWXYZ', cast(rand() * 10 as int))

,1)

GO 100

SELECT alloc_unit_type_desc, page_count,

avg_page_space_used_in_percent, record_count

FROM sys.dm_db_index_physical_stats( DB_ID('dbcc_corruption'),

OBJECT_ID(N'LotsOfWaste'),

NULL,NULL,'Detailed')

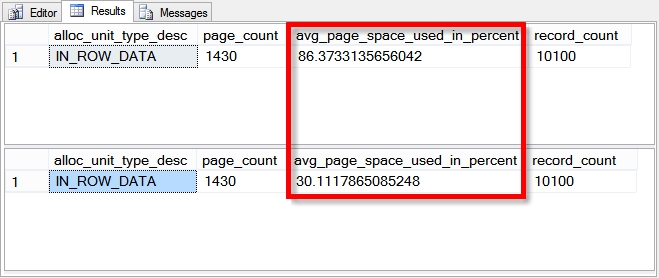

No, inserting more rows, even rows that are smaller than the original rows does not make use of the space left behind by the column removal. In this case inserting 100 rows ended up allocating another 4 data pages.

So where do we go from here, how do we reclaim the space associated with the columns that were deleted? Now we will try DBCC CleanTable since that is the topic of this posting. Lets see what happens.

SELECT alloc_unit_type_desc, page_count,

avg_page_space_used_in_percent, record_count

FROM sys.dm_db_index_physical_stats( DB_ID('dbcc_corruption'),

OBJECT_ID(N'LotsOfWaste'),

NULL,NULL,'Detailed')

DBCC CleanTable(dbcc_corruption, LotsOfWaste);

SELECT alloc_unit_type_desc, page_count,

avg_page_space_used_in_percent, record_count

FROM sys.dm_db_index_physical_stats( DB_ID('dbcc_corruption'),

OBJECT_ID(N'LotsOfWaste'),

NULL,NULL,'Detailed')

Here we see that the number of pages for the table hasn’t changed, and the record count hasn’t changed, but the average spaced used has changed from 86% to 30%.

There are still the same number of pages, but can we actually use those pages? Lets give it a try. Here we will insert 100 rows, and see if the page count grows as we saw it grow earlier…

SELECT alloc_unit_type_desc, page_count,

avg_page_space_used_in_percent, record_count

FROM sys.dm_db_index_physical_stats( DB_ID('dbcc_corruption'),

OBJECT_ID(N'LotsOfWaste'),

NULL,NULL,'Detailed')

GO

INSERT INTO [dbo].[LotsOfWaste]

([firstName]

,[lastName]

,[archived])

VALUES (

REPLICATE('1234567890ABCDEFGHIJKLMNOPQRSTUVWXYZ', cast(rand() * 10 as int))

,REPLICATE('1234567890ABCDEFGHIJKLMNOPQRSTUVWXYZ', cast(rand() * 10 as int))

,1)

GO 100

SELECT alloc_unit_type_desc, page_count,

avg_page_space_used_in_percent, record_count

FROM sys.dm_db_index_physical_stats( DB_ID('dbcc_corruption'),

OBJECT_ID(N'LotsOfWaste'),

NULL,NULL,'Detailed')

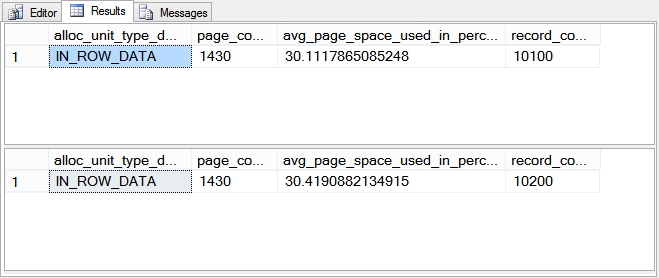

Here we see that the page count has not increased from the before to the after check, the record count has increased due to the 100 rows inserted, and the average spaced used has changed slightly.

What we did here was insert those last 100 rows into space that was not usable prior to running DBCC CleanTable. By cleaning up a table this way we don’t decrease the overall number of pages, the size of the table, but we do allow for possibly many more rows to be inserted into the space that has been allocated.

Warning:

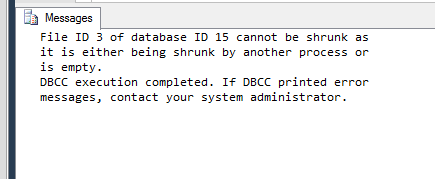

DBCC CleanTable for large tables may cause the transaction log to grow.

Notes:

DBCC CleanTable is doesn’t work on system tables or temporary tables.

For more information see TSQL Wiki DBCC cleantable.

DBCC Command month at SteveStedman.com is almost as much fun as looking at paint swatches at the home improvement store.

More from Stedman Solutions:

Steve and the team at Stedman Solutions are here for all your SQL Server needs.

Contact us today for your free 30 minute consultation..

We are ready to help!

Leave a Reply